Backdoors in Machine Learning Models

Interest in machine learning has grown rapidly over the past 20 years, driven by major advances in speech recognition and automatic text translation. Recent developments, such as generating text and images and solving mathematical problems, have shown the potential of learning systems. Because of these advances, machine learning is also increasingly used in safety-critical applications, for example in autonomous driving or in access systems that evaluate biometric characteristics. Machine learning is never error-free, however, and wrong decisions in such settings can lead to life-threatening situations. These limitations are well known and are usually taken into account when machine learning models are developed and integrated. For a long time, however, less attention was paid to what happens when someone intentionally tries to manipulate the model.

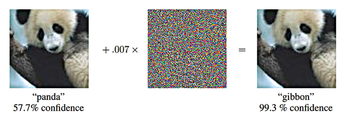

Adversarial Examples

Experts have raised the alarm about adversarial examples [1]: specifically manipulated images that can fool even state-of-the-art image recognition systems (Figure 1). In the most dangerous case, people cannot perceive any difference between the adversarial example and the original image from which it was computed. The model correctly identifies the original but fails to correctly classify the adversarial example. An attacker can even predetermine the category into which the adversarial example is erroneously classified. Further work [2] has shown that you can also manipulate the texture of real-world objects such that a model misclassifies them, even when they are viewed from different directions and distances.
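To make the idea of computing an adversarial example from an original more concrete, the following minimal sketch perturbs the input of a toy linear classifier. The article does not say which attack method is used; this sketch assumes the sign-of-gradient idea (known as the fast gradient sign method) and applies it to a hand-made model rather than a real image recognition system. All names and values (`weights`, `bias`, `original`, `epsilon`) are hypothetical and chosen only for illustration.

```python
# Toy illustration only: a hand-made linear classifier, not a real image model.
# All weights, pixel values, and epsilon are made-up assumptions.

def sign(value):
    """Return -1, 0, or 1 depending on the sign of value."""
    return (value > 0) - (value < 0)

def score(weights, bias, pixels):
    """Linear decision score: positive means class 1, otherwise class 0."""
    return sum(w * p for w, p in zip(weights, pixels)) + bias

def predict(weights, bias, pixels):
    return 1 if score(weights, bias, pixels) > 0 else 0

def perturb(weights, pixels, epsilon, true_label):
    """Shift every pixel by at most epsilon against the true class.

    For a linear score, the gradient with respect to the input is simply
    the weight vector, so the sign of each weight gives the step direction.
    Pixel values are clipped to the valid range [0, 1].
    """
    direction = -1 if true_label == 1 else 1
    return [
        min(1.0, max(0.0, p + direction * epsilon * sign(w)))
        for w, p in zip(weights, pixels)
    ]

# Hypothetical 100-"pixel" input that the model assigns to class 1.
weights = [0.1 if i % 2 == 0 else -0.1 for i in range(100)]
bias = -0.8
original = [0.6 if i % 2 == 0 else 0.4 for i in range(100)]

adversarial = perturb(weights, original, epsilon=0.05, true_label=1)
max_change = max(abs(a - o) for a, o in zip(adversarial, original))

print("original prediction:   ", predict(weights, bias, original))
print("adversarial prediction:", predict(weights, bias, adversarial))
print("largest pixel change:  ", round(max_change, 3))
```

With these made-up numbers, the prediction flips from class 1 to class 0 even though no pixel changes by more than 0.05. Many tiny changes, each aligned with the model's weights, add up to a large change in the score. Real attacks on deep networks follow the same principle but obtain the gradient through automatic differentiation.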

[...]
