Make a camera for lenticular photography

Wiggle Time

Lead Image © 3355m, 123RF.com

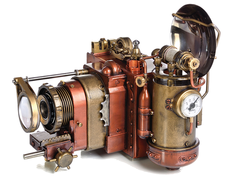

You can take lenticular images with a homemade camera to re-create the "wiggle" pictures of your childhood.

Lenticular images store multiple exposures in the same area. Tilting the image changes which exposure you see, which creates the impression of animation. Another application creates a spatial impression without special viewing aids (autostereoscopy). The digital version often shows up on social media as a "wigglegram."

Lenticular Cameras

On the consumer market, lenticular cameras are sold under the name ActionSampler. More than 40 years ago, the four-lens Nishika (Nimslo) appeared, followed by Fuji's eight-lens Rensha Cardia in 1991. Whereas the Nishika fired all of its shutters simultaneously, the Fuji exposed the 35mm film sequentially. Even today, these analog shots remain very popular on Instagram and similar platforms.

One way of creating a multilens digital recording system is to use a Raspberry Pi and a Camarray HAT (hardware attached on top) [1] by ArduCam [2]. The camera I make in this article uses four Sony IMX519 sensors spaced 4cm apart (Figure 1). After the first exposure, you can move the device by half the camera distance and expose again, which produces eight evenly spaced shots of a subject with a total of 32 megapixels (MP).
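The spacing arithmetic behind the two-exposure trick can be checked with a short sketch. It assumes the four sensors sit in a single row and that the device is moved sideways along that row. The function name `viewpoint_positions` and its parameters are made up for this illustration and are not part of any ArduCam software.

```python
def viewpoint_positions(num_sensors=4, spacing_cm=4.0):
    """Return the sorted horizontal viewpoint positions (in cm) of two exposures.

    Assumption: the sensors sit in one row, and the second exposure is taken
    after shifting the whole device sideways by half the sensor spacing.
    """
    # Sensor positions during the first exposure: 0, 4, 8, 12 cm
    first = [i * spacing_cm for i in range(num_sensors)]
    # Shift by half the camera distance for the second exposure
    shift = spacing_cm / 2
    second = [x + shift for x in first]
    # Interleave both exposures into one left-to-right sequence
    return sorted(first + second)


positions = viewpoint_positions()
# Distance between each pair of neighboring viewpoints
gaps = [b - a for a, b in zip(positions, positions[1:])]

print(positions)  # [0.0, 2.0, 4.0, 6.0, 8.0, 10.0, 12.0, 14.0]
print(gaps)       # [2.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0]
```

With a 4cm spacing, the second exposure fills the gaps of the first, so the eight viewpoints end up 2cm apart.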

[...]
