Let an AI chatbot do the work

Prompts and Completions

This is where the actual web access takes place. Line 24 creates a structure of the type CompletionRequest and stores the request's text in its Prompt attribute. The MaxTokens parameter limits how many tokens the language model may generate in response. The price list on OpenAI.com offers only a somewhat nebulous definition of what a token is [6]. According to that list, 1,000 tokens correspond to about 750 words of text, and in subscription mode the customer pays two cents per 1,000 tokens.

Both the question and the answer count toward the tokens you are billed for, and the question plus the MaxTokens allowance has to fit into the model's context window. If you use too many tokens, you will quickly reach the limit in free mode. However, if you set MaxTokens too low, longer output (for example, automatically written newspaper articles) will be cut off, and only part of the answer will be returned. The value of 1,000 tokens set in line 26 works well for typical use cases.

The value for Temperature in line 27 determines how hotheaded you want the chatbot's answers to be. A value higher than 0 causes the responses to vary, even at the expense of accuracy, but more on that later. The actual request to the API server is made by the CompletionStreamWithEngine function. Its last parameter is a callback function that the client calls whenever response packets arrive from the server. Line 32 simply prints the results on the standard output.

Forever Bot

To implement a chatbot that endlessly fields questions and outputs one answer at a time, Listing 2 wraps a text scanner listening on standard input in an infinite for loop. For each question typed, it calls the printResp() function in line 16. This function contacts the OpenAI server and prints its text response on the standard output. The program then jumps back to the beginning of the infinite loop and waits for the next question.

Listing 2

chat.go

```go
01 package main
02 import (
03   "bufio"
04   "fmt"
05   "os"
06 )
07 func main() {
08   ai := NewAI() // defined in openai.go
09   ai.init()
10   scanner := bufio.NewScanner(os.Stdin)
11   for {
12     fmt.Print("Ask: ")
13     if !scanner.Scan() { // false at end of input (Ctrl+D)
14       break
15     }
16     ai.printResp(scanner.Text()) // query the API, print the answer
17   }
18 }
```

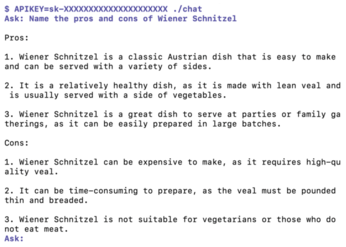

Figure 4 shows the output of the interactive chat program that fields the user's question on the terminal, sends it to the OpenAI server for a response, and prints its response to the standard output. The bot waits for the Enter key to confirm sending a question, prints the incoming answer, and jumps to the next input prompt. Pressing Ctrl+D or Ctrl+C terminates the program.

It is important to store the API token in the APIKEY environment variable of the program's environment; otherwise, the program aborts with an error message. In Figure 4, the user asks the AI model about the advantages and disadvantages of Wiener schnitzel [7] and receives three pro and three con points as an answer. It turns out that the electronic brain has amazingly precise knowledge of everything that can be found somewhere on the Internet (preferably on Wikipedia). It is actually capable of analyzing this content semantically, storing it in machine-readable form, and answering even the most abstruse questions about Wiener schnitzel in a meaningful way. As usual, the three standard commands shown in Listing 3 generate the chat binary with the ready-to-run chatbot from the source code in the listings.

Listing 3

Creating the Binary

```
$ go mod init chat
$ go mod tidy
$ go build chat.go openai.go
```

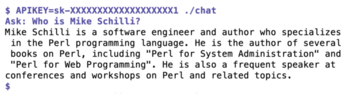

By the way, I've found that the AI system's knowledge is not always completely correct. In the answer to the question about who the author of this column is, it lists two Perl books that I have never written (Figure 5). For reference, the correct titles would have been Perl Power and Go To Perl 5. More importantly, there's no good way to find out what's right and what's wrong, because the bot never provides any references on how it arrived at a particular answer.

Setting the Creative Temperature

Programs can also ask the API to increase the variety of completions provided by the back end. Do you want the answers to be very precise, or do you prefer several varying answers to the same question in a more playful way, even at the risk of them not being 100 percent accurate?

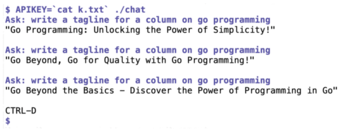

This behavior is controlled by the Temperature parameter in line 27 of Listing 1. With a value of 0 (gpt3.Float32Ptr(0)), the AI robotically gives the same precise answers every time. But even with a value as low as 1, things start to liven up: The AI constantly rewords things and comes up with interesting new variations. At the maximum value of 2, however, you get the feeling that the AI is somewhat incapacitated, and it outputs slurred nonsense with partly incorrect grammar. In Figure 6, the robot is asked to invent a new tagline for the Go programming column. With a temperature setting of 1, it provides several surprisingly good suggestions.
