Secure storage with GlusterFS

Bright Idea

You can create distributed, replicated, and high-performance storage systems using GlusterFS and some inexpensive hardware. Kurt explains.

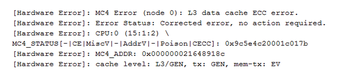

Recently I had a CPU cache memory error pop up in my file server logfile (Figure 1). I don't know if that means the CPU is failing, or if it got hit by a cosmic ray, or if something else happened, but now I wonder: Can I really trust this hardware with critical services anymore? When it comes to servers, the failure of a single component, or even a single system, should not take out an entire service.

In other words, I'm doing it wrong by relying on a single server for my file-serving needs. Even though I have the disks configured in a mirrored RAID array, this won't help if the CPU goes flaky and dies; I'll still have to build a new server, move the drives over, and hope that no data was corrupted. Now imagine that this isn't my personal file server but the back-end file server for your system boot images and partitions (because you're using KVM, RHEV, OpenStack, or something similar). In this case, the failure of a single server could bring a significant portion of your infrastructure to its knees. The availability aspect of the security triad (i.e., Availability, Integrity, and Confidentiality, or AIC) has not been properly addressed, and now you have to deal with a lot of angry users and managers.

[...]
