Go retrieves GPS data from the komoot app

As we all know, the past year and a half was completely lost to the pandemic. Because of various lockdowns, there wasn't much left to do outdoors in your leisure time. Playing soccer was prohibited, and jogging with a face covering was too strenuous. This prompted my wife and me to begin exploring areas of our adopted city, San Francisco. Every evening, on hour-long neighborhood walks, we hiked hidden pathways previously unknown to us. To our amazement, we discovered that even 25 years of living in a city is not enough to explore every last corner. We found countless little hidden stairways, unpaved paths, and sights completely unknown to major travel guides.

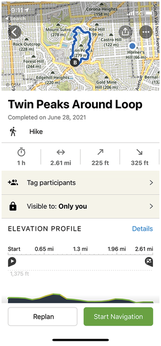

Memorizing all the turnoffs for these newly invented, winding city hiking trails is almost impossible, but fortunately a mobile phone can step in as a brain extension here. Hiking apps plan your tours, record your progress during the walk, display traveled paths on an online map, and let you share completed tours with friends (Figure 1). One of the best-known hiking (and biking) apps is the commercial komoot, which is based on OpenStreetMap data and remains free of charge as long as the user is invited by another (even new) user and sticks to one local hiking area.

[...]
