Getting started with the ELK Stack monitoring solution

Elk Hunting

© Photo by David Santoyo on Unsplash

ELK Stack is a powerful monitoring system known for efficient log management and versatile visualization. This hands-on workshop will help you take your first steps with setting up your own ELK Stack monitoring solution.

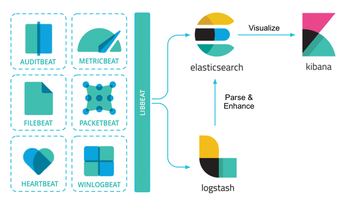

Today's networks require a monitoring solution with industrial-strength log management and analytics. One option that has gained popularity in recent years is ELK Stack [1]. The free and open source ELK Stack collection is maintained by a company called Elastic. (According to the website, the company has recently changed the name of the project to Elastic Stack, but the previous name is still in common usage.) ELK Stack is not a single tool but a collection of tools (Figure 1). The ELK acronym names the collection's three most important utilities: Elasticsearch, Logstash, and Kibana. At the heart of the stack, Elasticsearch stores and maintains the data and provides a search engine, based on Apache Lucene, for querying it. Logstash serves as the log processing pipeline: It collects data from a multitude of sources, transforms it, and then sends it to a chosen "stash." (Keep in mind that, despite its name, Logstash itself does not preserve any data.) Kibana provides a user-friendly interface for querying and visualizing the data.
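To make the division of labor more concrete, the following Python sketch imitates the collect, transform, and stash flow described above. It is a toy model only and does not use any ELK Stack API: The log line format, the `parse_failure` tag, and the names `parse_line`, `run_pipeline`, and `search` are all assumptions made for illustration. An in-memory list stands in for the stash, and a simple field match stands in for a search.

```python
import re

# Hypothetical log format, assumed for illustration only:
# "<timestamp> <LEVEL> <message>"
LINE_RE = re.compile(r"^(?P<timestamp>\S+)\s+(?P<level>[A-Z]+)\s+(?P<message>.*)$")


def parse_line(line):
    """Transform step: turn one raw log line into a structured event."""
    text = line.strip()
    match = LINE_RE.match(text)
    if match is None:
        # Keep unparsable lines instead of dropping them, and tag them
        # (the tag name is made up for this sketch).
        return {"message": text, "tags": ["parse_failure"]}
    return match.groupdict()


def run_pipeline(lines, stash):
    """Collect lines, transform each one, and hand the result to the stash.

    The pipeline itself keeps no data; it only passes events on.
    Returns the number of events that were forwarded.
    """
    count = 0
    for line in lines:
        if not line.strip():
            continue  # skip blank lines
        stash(parse_line(line))
        count += 1
    return count


def search(store, **criteria):
    """Toy stand-in for a search: return events whose fields match exactly."""
    return [
        event for event in store
        if all(event.get(key) == value for key, value in criteria.items())
    ]


if __name__ == "__main__":
    store = []  # the "stash": here just a list in memory
    raw = [
        "2024-05-01T10:00:00Z INFO service started",
        "2024-05-01T10:00:05Z ERROR disk almost full",
        "not a structured line",
    ]
    run_pipeline(raw, store.append)
    for event in search(store, level="ERROR"):
        print(event["timestamp"], event["message"])
```

Note that the pipeline function holds no state of its own, which mirrors the point that Logstash does not preserve data: Everything that should be kept has to end up in the stash.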

A bundle of tiny apps called Beats specializes in collecting data and feeding it to Logstash or Elasticsearch. The Beats include:

[...]
