Secure storage with GlusterFS

Bright Idea

You can create distributed, replicated, and high-performance storage systems using GlusterFS and some inexpensive hardware. Kurt explains.

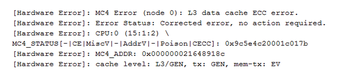

Recently I had a CPU cache memory error pop up in my file server logfile (Figure 1). I don't know if that means the CPU is failing, or if it got hit by a cosmic ray, or if something else happened, but now I wonder: Can I really trust this hardware with critical services anymore? When it comes to servers, the failure of a single component, or even a single system, should not take out an entire service.

In other words, I'm doing it wrong by relying on a single server to provide my file-serving needs. Even though I have the disks configured in a mirrored RAID array, the mirror won't help if the CPU goes flaky and dies; I'll still have to build a new server, move the drives over, and hope that no data was corrupted. Now imagine that this isn't my personal file server but the back-end file server for your system boot images and partitions (because you're using KVM, RHEV, OpenStack, or something similar). In this case, the failure of a single server could bring a significant portion of your infrastructure to its knees. Thus, the availability aspect of the security triad (i.e., Availability, Integrity, and Confidentiality, or AIC) was not properly addressed, and now you have to deal with a lot of angry users and managers.

[...]
